GCP AI Fundamentals - AIML Series 3 - TensorFlow

Introduction to TensorFlow

TensorFlow, an open-source library developed by Google Brain, is a cornerstone for machine learning and AI tasks. Here's an in-depth look at its fundamental aspects:

- Flexibility and Scalability: TensorFlow supports various platforms (CPUs, GPUs, TPUs) and can scale from mobile devices to large clusters.

- Community Support: A vast community and extensive resources, including tutorials, pre-trained models, and research papers, enhance learning and problem-solving.

- Wide Application: From research to production, TensorFlow is used for diverse applications such as natural language processing, computer vision, and reinforcement learning.

TensorFlow’s comprehensive suite of tools and libraries empowers developers to build and deploy machine learning models efficiently, making it an invaluable resource for AI practitioners.

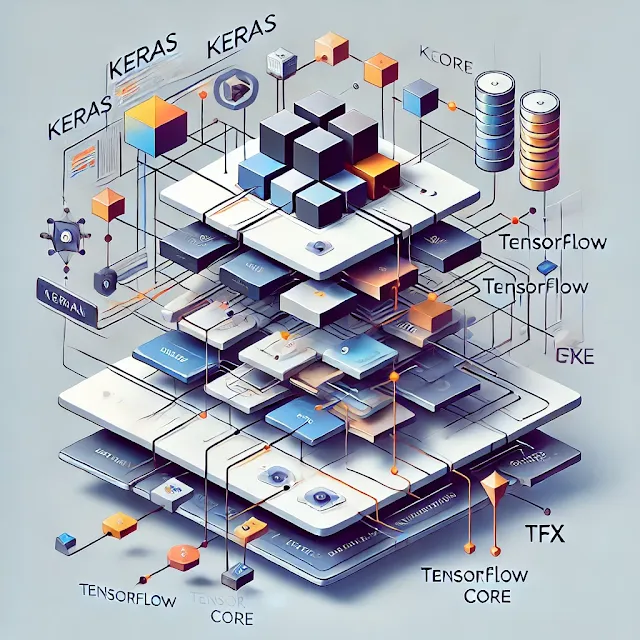

TensorFlow API Hierarchy and Components

The API hierarchy in TensorFlow is designed to cater to different levels of abstraction, providing flexibility and ease of use:

- Core API: This includes low-level operations on Tensors (multidimensional arrays), allowing fine-grained control over model computations.

- High-Level API (tf.keras): Built on top of the Core API, tf.keras simplifies the creation, training, and evaluation of models through a high-level, user-friendly interface.

- Ecosystem APIs: TensorFlow includes specialized libraries for different tasks, such as TensorFlow Extended (TFX) for production machine learning, TensorFlow Lite for mobile and embedded devices, and TensorFlow.js for machine learning in JavaScript.

Key Components:

- Tensors and Operations: Fundamental units for data representation and mathematical computations.

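- Graphs and Functions: Graphs represent computations as dataflow. TensorFlow 2.x executes operations eagerly by default and uses tf.function to trace Python code into graphs; explicit sessions (tf.Session) belong to the TensorFlow 1.x API.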

- Models and Layers: Abstract constructs for defining and manipulating neural networks.

Understanding this hierarchy and its components is crucial for efficient TensorFlow usage, enabling seamless transitions between different levels of abstraction based on project requirements.
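
As a rough illustration of moving between levels of abstraction, the sketch below runs the same kind of affine computation once with Core API operations and once with a tf.keras layer. It assumes TensorFlow 2.x; the tensor values and the function name affine are illustrative and not taken from any particular project.

```python
import tensorflow as tf

# Core API: explicit tensors and operations.
x = tf.constant([[1.0, 2.0], [3.0, 4.0]])   # shape (2, 2)
w = tf.constant([[0.5], [-1.0]])            # shape (2, 1)
b = tf.constant([0.1])

@tf.function  # traces the Python function into a graph on first call
def affine(inputs):
    return tf.matmul(inputs, w) + b

core_out = affine(x)

# High-level API: a Dense layer wraps the same kind of computation
# and creates its own (randomly initialized) weights on first call.
dense = tf.keras.layers.Dense(units=1)
keras_out = dense(x)

print(core_out.numpy())
print(keras_out.shape)  # (2, 1)
```

The Core API version exposes every tensor, while the Keras version hides weight creation behind the layer object.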

Training Large Datasets with tf.data API

Efficient handling and processing of large datasets are vital for robust model training. The tf.data API facilitates this by providing a flexible, high-performance pipeline for data manipulation:

- Dataset API: Allows the creation of complex input pipelines from simple, reusable pieces.

- Prefetching and Caching: Prefetching overlaps data preprocessing with model execution, while caching stores preprocessed elements so they are not recomputed on every epoch.

- Parallelism: Utilizes multi-core processors to parallelize data loading and preprocessing, significantly speeding up the training process.

Steps for Effective Dataset Management:

- Loading Data: From various sources such as CSV files, databases, or TFRecords.

- Transforming Data: Applying operations like batching, shuffling, and mapping to preprocess the data.

- Optimizing Performance: Strategies such as interleaving, parallel map, and prefetching enhance data pipeline efficiency.

Implementing these steps ensures that the training process is not bottlenecked by data input, thus maintaining high throughput and reducing training time.
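
The steps above might be combined as in the following minimal sketch. The toy NumPy arrays stand in for a real source such as CSV or TFRecord files, and the scale function is a hypothetical preprocessing step chosen only for illustration.

```python
import numpy as np
import tensorflow as tf

# Toy in-memory data standing in for a real source (CSV, TFRecord, ...).
features = np.arange(20, dtype=np.float32).reshape(10, 2)
labels = np.arange(10, dtype=np.int32)

def scale(x, y):
    # Hypothetical per-element transformation.
    return x / 10.0, y

dataset = (
    tf.data.Dataset.from_tensor_slices((features, labels))
    .map(scale, num_parallel_calls=tf.data.AUTOTUNE)  # parallel map
    .cache()                       # keep preprocessed elements after the first pass
    .shuffle(buffer_size=10, seed=0)
    .batch(4)
    .prefetch(tf.data.AUTOTUNE)    # overlap input preparation with training
)

batch_shapes = [tuple(x.shape) for x, _ in dataset]
print(batch_shapes)  # [(4, 2), (4, 2), (2, 2)]
```

Placing cache before shuffle keeps the cached elements reusable while still giving a different order on each epoch.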

Working In-Memory and with Files

Effective data handling, whether in-memory or with files, is crucial for the smooth functioning of machine learning workflows:

- In-Memory Data: Provides quick access and is useful for small datasets or when data fits comfortably in memory.

- File-Based Data: Essential for handling large datasets that exceed memory capacity. Techniques like memory mapping and streaming data help manage this efficiently.

- Hybrid Approach: Balances memory usage and access speed, using in-memory operations for critical tasks and file-based operations for bulk data.

Common Techniques:

- Memory Mapping: Enables efficient access to large files by mapping file contents directly into memory.

- Streaming Data: Processes records incrementally as they arrive instead of loading everything at once, ideal for scenarios like continuous data flow from sensors.

- Data Partitioning: Splits large datasets into smaller, manageable chunks to balance memory load and processing speed.

These techniques ensure efficient data handling, crucial for maintaining performance and scalability in machine learning projects.
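
To make the in-memory versus file-based distinction concrete, here is a small sketch that reads the same values both ways. The file name values.txt and the temporary directory are assumptions made for the example; a real pipeline would more likely point at existing CSV or TFRecord files.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# In-memory: the whole array is held in RAM.
values = np.arange(6, dtype=np.float32)
in_memory_ds = tf.data.Dataset.from_tensor_slices(values)

# File-based: write one value per line, then stream the lines lazily.
tmp_dir = tempfile.mkdtemp()
path = os.path.join(tmp_dir, "values.txt")  # hypothetical file name
with open(path, "w") as f:
    for v in values:
        f.write(f"{v}\n")

file_ds = (
    tf.data.TextLineDataset(path)
    .map(lambda line: tf.strings.to_number(line, out_type=tf.float32))
    .batch(2)  # read in small chunks rather than all at once
)

in_memory_total = sum(float(v) for v in in_memory_ds)
file_total = sum(float(tf.reduce_sum(batch)) for batch in file_ds)
print(in_memory_total, file_total)  # 15.0 15.0
```

Both datasets expose the same iteration interface, so downstream training code does not need to know where the data lives.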

Getting Data Ready for Model Training

Proper data preparation is a fundamental step in the machine learning pipeline, impacting model performance and accuracy:

- Cleaning and Preprocessing: Handling missing values, outliers, and data normalization ensures the data is in a suitable format for training.

- Feature Engineering: Creating meaningful features from raw data can significantly enhance model performance.

- Data Augmentation: Expanding datasets through techniques like rotation, flipping, and cropping, especially useful in image processing tasks.

Essential Preprocessing Steps:

- Normalization: Scaling features to a standard range improves model convergence during training.

- Categorical Encoding: Converts categorical variables into numerical values, making them suitable for model input.

- Splitting Data: Dividing data into training, validation, and test sets ensures proper model evaluation and makes overfitting detectable.

A well-prepared dataset leads to more accurate and reliable model training, forming the backbone of successful machine learning projects.
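
The preprocessing steps can be sketched with plain NumPy as follows. The synthetic data, the 70/15/15 split, and the three-category column are all assumptions for illustration; the point is that normalization statistics are computed from the training portion only.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
numeric = rng.normal(loc=50.0, scale=10.0, size=(100, 2))  # hypothetical numeric features
category = rng.integers(0, 3, size=100)                    # hypothetical 3-class column

# Split first so normalization statistics come from training data only.
indices = rng.permutation(100)
train_idx, val_idx, test_idx = indices[:70], indices[70:85], indices[85:]

# Normalization: zero mean and unit variance per feature.
mean = numeric[train_idx].mean(axis=0)
std = numeric[train_idx].std(axis=0)
normalized = (numeric - mean) / std

# Categorical encoding: one-hot vectors of length 3.
one_hot = np.eye(3)[category]

features = np.concatenate([normalized, one_hot], axis=1)
x_train, x_val, x_test = features[train_idx], features[val_idx], features[test_idx]
print(x_train.shape, x_val.shape, x_test.shape)  # (70, 5) (15, 5) (15, 5)
```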

Embeddings

Embeddings are a powerful technique for representing data in a lower-dimensional space, capturing semantic relationships:

- Word Embeddings: Represent words as dense vectors, capturing semantic meaning and relationships. Techniques like Word2Vec and GloVe are commonly used.

- Learned Embeddings: Generated during model training, these embeddings are optimized for specific tasks, such as recommendation systems.

- Applications: Widely used in natural language processing (NLP), recommendation systems, and other tasks requiring a compact representation of high-dimensional data.

Creating Embeddings:

- Pre-trained Models: Utilize existing embeddings trained on large corpora, such as Word2Vec, GloVe, or FastText.

- Custom Embeddings: Train embeddings on your specific dataset, allowing for task-specific optimization.

Embeddings facilitate efficient and effective representation of complex data, enhancing the performance of various machine learning models.
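
A custom, trainable embedding can be sketched with the Keras Embedding layer. The vocabulary size, embedding width, and token ids below are made-up values for illustration.

```python
import tensorflow as tf

vocab_size = 1000  # hypothetical vocabulary size
embed_dim = 16     # hypothetical embedding width

embedding = tf.keras.layers.Embedding(input_dim=vocab_size, output_dim=embed_dim)

# Two "sentences" of four integer token ids each.
token_ids = tf.constant([[1, 5, 9, 2], [7, 7, 3, 0]])
vectors = embedding(token_ids)
print(vectors.shape)  # (2, 4, 16)

# The same id always maps to the same vector (id 7 appears twice).
same = tf.reduce_all(vectors[1, 0] == vectors[1, 1])
print(bool(same))  # True
```

The layer is a lookup table of shape (vocab_size, embed_dim) whose rows are updated by backpropagation like any other weights.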

Building Neural Networks with TensorFlow and Keras API

Building and training neural networks with TensorFlow and the Keras API is streamlined and efficient:

- Activation Functions: Introduce non-linearity into neural networks; commonly used functions include Sigmoid, ReLU, and Tanh.

- Model Architectures: TensorFlow supports both the Sequential API for simple, linear stacks of layers and the Functional API for models with non-linear topology, such as multiple inputs or outputs, shared layers, or branches.

- Training Process: Involves compiling the model (selecting optimizers, loss functions, and metrics) and fitting it to the data.

Steps to Build a Neural Network:

- Define Model: Use Keras layers to construct the model architecture.

- Compile Model: Select appropriate optimizer (e.g., Adam, SGD) and loss function (e.g., binary cross-entropy, categorical cross-entropy).

- Train Model: Fit the model to the training data, monitor performance, and adjust hyperparameters as needed.

These steps ensure that neural networks are built and trained efficiently, leveraging the power of TensorFlow and Keras for high-performance machine learning.
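
The define, compile, and train steps might look like this in a minimal sketch. The synthetic data, layer sizes, and epoch count are arbitrary choices for illustration rather than recommended settings.

```python
import numpy as np
import tensorflow as tf

# Synthetic binary-classification data (illustrative only).
rng = np.random.default_rng(0)
x = rng.normal(size=(64, 4)).astype("float32")
y = (x.sum(axis=1) > 0).astype("float32")

# 1. Define the model with the Sequential API.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

# 2. Compile: choose optimizer, loss, and metrics.
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# 3. Train, holding out 25% of the data for validation.
history = model.fit(x, y, epochs=3, batch_size=16, validation_split=0.25, verbose=0)

predictions = model.predict(x[:5], verbose=0)
print(predictions.shape)  # (5, 1)
```

The history object records loss and metric values per epoch, which is what one would inspect when adjusting hyperparameters.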

Serving Models in Cloud

Deploying trained models in the cloud enables scalable, production-grade machine learning services:

- Model Serving: TensorFlow Serving or managed cloud services such as Vertex AI (the successor to Google Cloud AI Platform) provide robust solutions for deploying models.

- APIs and Endpoints: Expose models via RESTful APIs, enabling integration with various applications.

- Scalability: Cloud infrastructure handles multiple requests efficiently, ensuring high availability and low latency.

Key Considerations:

- Latency and Throughput: Optimize the infrastructure to handle real-time requests efficiently.

- Security: Ensure data privacy and secure access to the deployed models.

- Monitoring: Track model performance, detect anomalies, and gather usage statistics to maintain and improve service quality.

Cloud-based model serving ensures that machine learning models are accessible, scalable, and maintainable, facilitating their integration into various applications and services.
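
As a sketch of what gets deployed, the example below exports a SavedModel and reloads it the way a serving process would. The Doubler module is a hypothetical stand-in for a trained model, and the directory layout assumes TensorFlow Serving's model_name/version convention. The JSON body follows the "instances" format of TensorFlow Serving's REST predict API; the endpoint in the comment uses the default REST port and is an assumption about the deployment.

```python
import json
import os
import tempfile

import tensorflow as tf

class Doubler(tf.Module):
    """Hypothetical stand-in for a trained model: multiplies its input by two."""

    @tf.function(input_signature=[tf.TensorSpec(shape=[None, 3], dtype=tf.float32)])
    def __call__(self, x):
        return {"outputs": x * 2.0}

# TensorFlow Serving looks for <model_name>/<version>/ directories.
export_dir = os.path.join(tempfile.mkdtemp(), "doubler", "1")
module = Doubler()
tf.saved_model.save(module, export_dir, signatures=module.__call__)

# Reload and call the serving signature, as a model server would.
loaded = tf.saved_model.load(export_dir)
serve = loaded.signatures["serving_default"]
result = serve(x=tf.constant([[1.0, 2.0, 3.0]]))["outputs"].numpy()

# Request body a client could POST to, for example,
# http://<host>:8501/v1/models/doubler:predict (assumed endpoint).
request_body = json.dumps({"instances": [[1.0, 2.0, 3.0]]})
print(result.tolist())  # [[2.0, 4.0, 6.0]]
print(request_body)
```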

Model Subclassing

Subclassing in TensorFlow provides flexibility for defining custom models and layers:

- Custom Layers and Models: Allow for unique architectures tailored to specific tasks.

- Override Methods: Customize training loops, inference processes, and layer behavior.

- Hybrid Models: Combine standard layers with custom components to achieve desired functionality.

Subclassing Example:

- Define a Class: Inherit from tf.keras.Model or tf.keras.layers.Layer.

- Override `__init__` and `call` Methods: Create layers and state in `__init__`, and implement the forward pass in `call`.

Subclassing empowers developers to create highly customized models, enabling more fine-grained control over model behavior and training processes.
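
A minimal sketch of both kinds of subclassing follows. The class names ScaledDense and TinyClassifier, the scaling factor, and the layer sizes are hypothetical and chosen only to show where custom logic goes.

```python
import tensorflow as tf

class ScaledDense(tf.keras.layers.Layer):
    """Custom layer: a dense projection followed by a fixed scaling factor."""

    def __init__(self, units, factor=0.5, **kwargs):
        super().__init__(**kwargs)
        self.dense = tf.keras.layers.Dense(units, activation="relu")
        self.factor = factor

    def call(self, inputs):
        # Forward pass of the custom layer.
        return self.dense(inputs) * self.factor

class TinyClassifier(tf.keras.Model):
    """Custom model mixing a custom layer with a standard Dense layer."""

    def __init__(self, num_classes):
        super().__init__()
        self.hidden = ScaledDense(8)
        self.out = tf.keras.layers.Dense(num_classes, activation="softmax")

    def call(self, inputs, training=False):
        return self.out(self.hidden(inputs))

model = TinyClassifier(num_classes=3)
probs = model(tf.zeros((2, 4)))
print(probs.shape)  # (2, 3)
```

Because TinyClassifier inherits from tf.keras.Model, it can still be compiled and trained with the usual compile and fit calls.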

Regularization Basics

Regularization techniques are essential to prevent overfitting and improve model generalization:

- L1 and L2 Regularization: Add penalties to the loss function based on the magnitude of model weights, encouraging simpler models.

- Dropout: Randomly disable neurons during training to prevent over-reliance on specific neurons, enhancing model robustness.

- Early Stopping: Halt training when validation performance stops improving, preventing overfitting.

Applying Regularization:

- Weight Penalties: Include L1 or L2 regularization terms in the loss function.

- Dropout Layers: Integrate dropout into the network architecture.

- Early Stopping Callbacks: Monitor validation performance and stop training when performance plateaus.

Regularization techniques help maintain a balance between model complexity and performance, ensuring that models generalize well to unseen data.
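
The three techniques can be combined in one small model, as in this sketch. The penalty strength, dropout rate, and patience value are arbitrary illustrative choices, and the data is synthetic.

```python
import numpy as np
import tensorflow as tf

rng = np.random.default_rng(0)
x = rng.normal(size=(80, 4)).astype("float32")
y = (x[:, 0] > 0).astype("float32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    # Weight penalty: L2 term added to the loss for this layer's kernel.
    tf.keras.layers.Dense(16, activation="relu",
                          kernel_regularizer=tf.keras.regularizers.l2(1e-3)),
    # Dropout: randomly zeroes 30% of activations during training only.
    tf.keras.layers.Dropout(0.3),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy")

# Early stopping: stop once validation loss has not improved for 2 epochs.
early_stop = tf.keras.callbacks.EarlyStopping(
    monitor="val_loss", patience=2, restore_best_weights=True)

history = model.fit(x, y, epochs=20, validation_split=0.25,
                    callbacks=[early_stop], verbose=0)
epochs_run = len(history.history["loss"])
print(epochs_run)  # at most 20, fewer if early stopping triggered
```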

Measuring Model Complexity

Understanding and measuring model complexity is crucial for balancing performance and efficiency:

- Parameters Count: Total number of trainable weights in the model.

- Depth and Width: Number of layers (depth) and neurons per layer (width).

- Training Time: Often correlates with model complexity, impacting resource usage and scalability.

Balancing Complexity:

- Model Size vs. Performance: Find an optimal balance where the model performs well without being overly complex.

- Pruning: Remove unnecessary weights or neurons to reduce model size while maintaining performance.

- Quantization: Lower the precision of weights and activations to speed up inference and reduce memory footprint.

Balancing model complexity ensures efficient use of resources and maintains high performance, crucial for deploying models in resource-constrained environments.
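
Parameter count, depth, and width can be read directly from a Keras model. In the sketch below the layer sizes are arbitrary; a Dense layer with n inputs and m units has n*m weights plus m biases.

```python
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),  # 4*8 weights + 8 biases = 40
    tf.keras.layers.Dense(1),                     # 8*1 weights + 1 bias  = 9
])

total_params = model.count_params()
per_layer = [layer.count_params() for layer in model.layers]
depth = len(model.layers)
print(total_params, per_layer, depth)  # 49 [40, 9] 2
```

Doubling the hidden width to 16 would raise the total to 97 parameters, which shows how quickly width affects model size.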

Training at Scale with Vertex AI

Vertex AI, Google’s managed machine learning platform, facilitates large-scale training and deployment:

- Distributed Training: Parallelize training across multiple nodes, accelerating the training process.

- AutoML: Automate model building and hyperparameter tuning, reducing the need for manual intervention.

- Managed Services: Simplify infrastructure management, allowing developers to focus on model development.

Vertex AI Features:

- Custom Training: Use your code and models, leveraging Vertex AI’s infrastructure.

- Hyperparameter Tuning: Optimize model performance through automated searches for the best hyperparameters.

- Model Monitoring: Continuously track deployed model metrics, ensuring ongoing performance and reliability.

Vertex AI provides a comprehensive solution for scaling machine learning workflows, enabling efficient training and deployment of high-performance models.
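
Distributed training code of the kind one might submit as a Vertex AI custom training job can be sketched with tf.distribute. This example uses MirroredStrategy, which is only one of several strategies and an assumption here; it contains no Vertex AI API calls, and the model, data, and batch size are illustrative.

```python
import numpy as np
import tensorflow as tf

# MirroredStrategy replicates the model on every local GPU;
# on a CPU-only machine it runs with a single replica.
strategy = tf.distribute.MirroredStrategy()
num_replicas = strategy.num_replicas_in_sync

with strategy.scope():
    # Variables must be created inside the scope so they are mirrored.
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(8, activation="relu"),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="sgd", loss="mse")

# Scale the global batch size with the number of replicas.
global_batch = 16 * num_replicas
rng = np.random.default_rng(0)
x = rng.normal(size=(64, 4)).astype("float32")
y = x.sum(axis=1, keepdims=True)
dataset = tf.data.Dataset.from_tensor_slices((x, y)).batch(global_batch)

history = model.fit(dataset, epochs=2, verbose=0)
print(num_replicas, len(history.history["loss"]))
```

The same script runs unchanged on one device or several, which is what makes it suitable for a managed training service that provisions the hardware.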

Conclusion

Mastering TensorFlow and its extensive ecosystem equips developers with the tools needed to create robust AI solutions, from experimental research to full-scale production deployments. Leveraging advanced tools like Vertex AI streamlines workflows, allowing for efficient, scalable, and high-performance machine learning models. By understanding these fundamentals, you can harness the full potential of TensorFlow and Google Cloud Platform in your AI projects.
